Category: English

-

AutoAgent: An Open-Source Framework for Natural-Language LLM Agents

AutoAgent is an open-source framework from the Data Science and AI Laboratory, The University of Hong Kong (HKU-DSAI Lab).It was introduced in the paper AutoAgent: A Fully-Automated and Zero-Code Framework for LLM Agents (arXiv:2502.05957) by Jiabin Tang, Tianyu Fan, and Chao Huang. This project enables researchers and engineers to create and deploy large-language-model (LLM) agents…

-

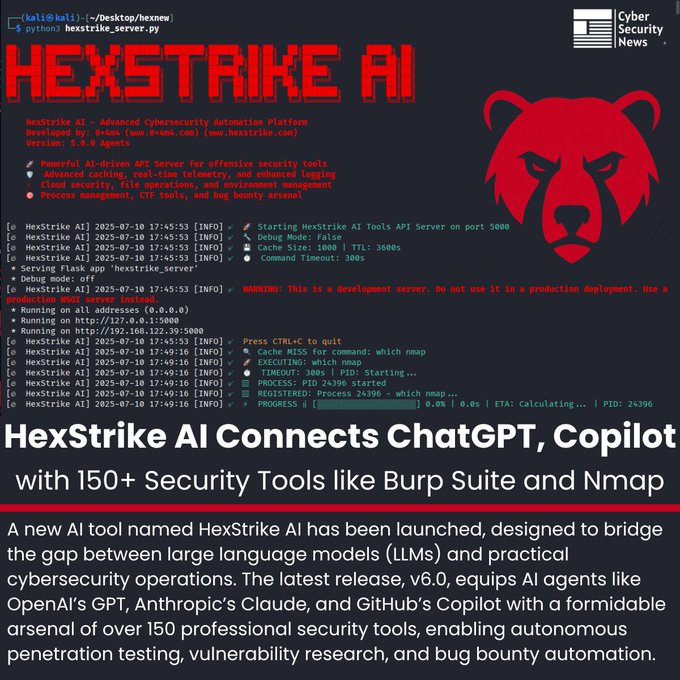

HexStrike AI v6.0: when artificial intelligence connects to the heart of cybersecurity 🔐🤖

The line between human and automated cybersecurity is becoming increasingly thin. With the release of HexStrike AI v6.0, language models such as ChatGPT, Claude, or Copilot are no longer just textual assistants but true operators capable of carrying out penetration tests from start to finish. The secret lies in its architecture: HexStrike works as an…

-

🤖 GPT-5: Between Promise and Reality

OpenAI unveiled GPT-5 as its most advanced model to date. Wrapped in the glow of marketing , GPT-5 was introduced with headlines calling it a leap toward Artificial General Intelligence (AGI). Yet once the announcements fade and real-world testing begins, the picture is less epic: yes, there are tangible improvements , but also setbacks and…

-

🧠 Google Opal: building AI-powered apps without writing code is now possible

In a new experiment that seems to anticipate the direction of creative computing, Google has introduced Opal, a tool that allows users to build small AI-driven applications using only natural language. No programming knowledge is required. No installation needed. You just describe what you want to achieve, and the system does the rest. Opal is…

-

Apple challenges AI’s “quirks”: Do large language models truly reason?

Just days before WWDC 2025, Apple took an unusual step: instead of announcing new features, it published a study titled “The Illusion of Thinking”, questioning whether so-called “reasoning models” (LRMs) can actually think through complex problems. Models from OpenAI, Anthropic—with Claude 3.7 Sonnet—DeepSeek and Google Gemini were tested on logic puzzles like the Tower of…

-

🧠 Mistral launches “Magistral”, the AI that reasons step by step — taking on OpenAI, Google and DeepSeek

Artificial intelligence is advancing rapidly, but not all approaches follow the same path. While companies like OpenAI, Anthropic and DeepSeek compete with ever-more powerful, closed models, the French startup Mistral AI is choosing a different direction: transparency, logical reasoning and native multilingualism. With the launch of Magistral, its new language model family, Mistral not only…

-

🚀 EdgeCortix brings generative AI to the edge with SAKURA-II and Raspberry Pi 5

EdgeCortix has announced that its SAKURA-II AI accelerator (M.2 format) is now compatible with a broader range of ARM-based platforms, including the Raspberry Pi 5 and Aetina’s Rockchip RK3588 platform. This compatibility expansion marks a significant step towards democratized access to advanced generative AI capabilities at the edge—combining high performance, energy efficiency, and deployment flexibility.…

-

ByteDance launches BAGEL‑7B‑MoT: a new AI model that sees, reads, and creates

ByteDance (the company behind TikTok) has introduced a new artificial intelligence model called BAGEL‑7B‑MoT, and while the name may sound complex, its purpose is clear: to combine text, images, and video into a single intelligent system that can understand and generate content as if it were “seeing” and “thinking.” What is BAGEL? BAGEL is a…

-

DeepSeek R1-0528: The Chinese Artificial Intelligence That Refuses to Be Left Behind

While the race for AI supremacy is dominated by giants like OpenAI, Google, and Anthropic, from China, the startup DeepSeek is moving forward—quietly but confidently. Its latest move: an update to its flagship model, DeepSeek R1-0528, a version that proves there are no minor players left in the AI arena. Far from being just a…

-

Claude 4: The New AI That’s Ready to Compete Hard

The world of artificial intelligence keeps moving forward at lightning speed, and now it’s Claude 4’s turn — the new model family introduced by Anthropic, a company quickly gaining ground in the AI field. With this new generation, they’re stepping up to compete directly with giants like OpenAI (ChatGPT) and Google (Gemini).Is it worth your…