Just days before WWDC 2025, Apple took an unusual step: instead of announcing new features, it published a study titled “The Illusion of Thinking”, questioning whether so-called large reasoning models (LRMs) can actually think through complex problems. Models from OpenAI, Anthropic (Claude 3.7 Sonnet), DeepSeek, and Google (Gemini) were tested on logic puzzles like the Tower of Hanoi and the river crossing challenge. The results were surprising: on simple tasks, standard LLMs like GPT-4 performed better. At moderate complexity, LRMs had the edge, but as complexity rose further, both collapsed to near-zero accuracy 🧠📉.

Researchers noticed that as tasks became more complex, LRMs reached a threshold beyond which they reduced their “reasoning effort,” even when they still had token budget to spare. They called this a “complete accuracy collapse,” where the models, instead of thinking harder, simply “gave up” before solving the puzzle.

OpenAI, Anthropic, and Google pushed back, claiming that current models are already laying the groundwork for tool-using agents capable of solving increasingly difficult problems. The observed “collapses,” they argued, are tied to safeguards meant to avoid excessively long or unstable responses 🧪🛑.

Apple’s team aimed for clean data by using puzzles whose solutions the models were unlikely to have seen during training. They didn’t just evaluate final answers; they also analyzed the intermediate reasoning steps, highlighting a deeper issue 🧩🧬.
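To make the idea of checking intermediate steps concrete, here is a minimal Python sketch for the Tower of Hanoi. It is not Apple's evaluation code; the function names (`solve_hanoi`, `evaluate`) and the move format (pairs of peg indices) are assumptions made for illustration. It shows two things: the optimal solution needs 2^n - 1 moves, so difficulty grows quickly with each added disk, and a proposed move list can be replayed to find the first illegal step rather than only scoring the final answer.

```python
from typing import List, Optional, Tuple

# Hypothetical move format: (source peg, target peg), pegs numbered 0..2.
Move = Tuple[int, int]


def solve_hanoi(n: int, src: int = 0, dst: int = 2, aux: int = 1) -> List[Move]:
    """Return the optimal move list for n disks (2**n - 1 moves)."""
    if n == 0:
        return []
    return (
        solve_hanoi(n - 1, src, aux, dst)    # park the n-1 smaller disks
        + [(src, dst)]                       # move the largest disk
        + solve_hanoi(n - 1, aux, dst, src)  # bring the smaller disks back on top
    )


def evaluate(n: int, moves: List[Move]) -> Tuple[Optional[int], bool]:
    """Replay a move list step by step.

    Returns (index of the first illegal move or None, whether the puzzle is solved).
    """
    pegs = [list(range(n, 0, -1)), [], []]  # peg 0 starts full, largest disk at the bottom
    for i, (src, dst) in enumerate(moves):
        if src not in range(3) or dst not in range(3):
            return i, False  # peg index out of range
        if not pegs[src]:
            return i, False  # nothing to pick up
        if pegs[dst] and pegs[dst][-1] < pegs[src][-1]:
            return i, False  # larger disk placed on a smaller one
        pegs[dst].append(pegs[src].pop())
    solved = pegs[2] == list(range(n, 0, -1))
    return None, solved


if __name__ == "__main__":
    for disks in (3, 5, 10):
        print(disks, "disks:", len(solve_hanoi(disks)), "moves")
    print(evaluate(3, solve_hanoi(3)))
    print(evaluate(3, [(0, 2), (0, 2)]))
```

A checker like this can report where a model's answer first goes wrong, which is the kind of signal a final-answer-only benchmark would miss.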

This approach raises a central question: do LRMs really “think,” or do they follow learned patterns only up to a certain threshold? For some, this casts doubt on the path toward Artificial General Intelligence (AGI), suggesting we may be hitting fundamental limits.

Yet Apple’s stance is not just critical—it’s constructive. The company is calling for more scientific rigor in evaluating AI, challenging benchmarks based solely on math or coding tasks that may be biased or contaminated 🧭🔬.

What does this mean for AI’s future?

- Transparency & evaluation: Apple sets a new bar by questioning how and why we measure machine “intelligence”.

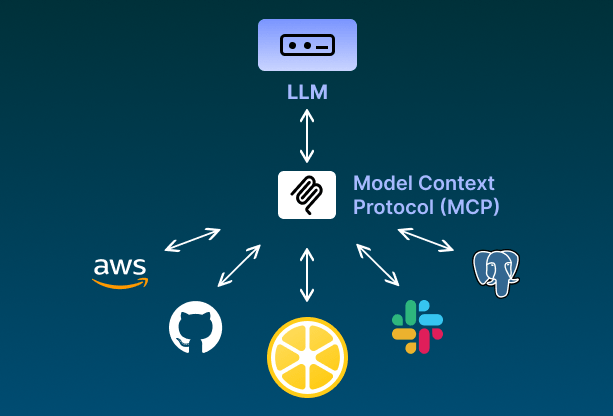

- Design vs. ability: The industry may be limiting AI more by architecture than by true potential.

- AGI roadmap: If models break down with complex reasoning, we may need to rethink how we train and structure them.

In short, Apple isn’t just criticizing. It’s proposing a new direction: toward explainable, scientifically evaluated AI that must show not just what it does, but how it thinks, or fails to.

#AppleAI #AIreasoning #AGIdebate #Claude3 #GPT4 #DeepSeek #GoogleGemini #IllusionOfThinking

https://www.ctol-es.com/news/study-challenges-apple-ai-reasoning-limitations